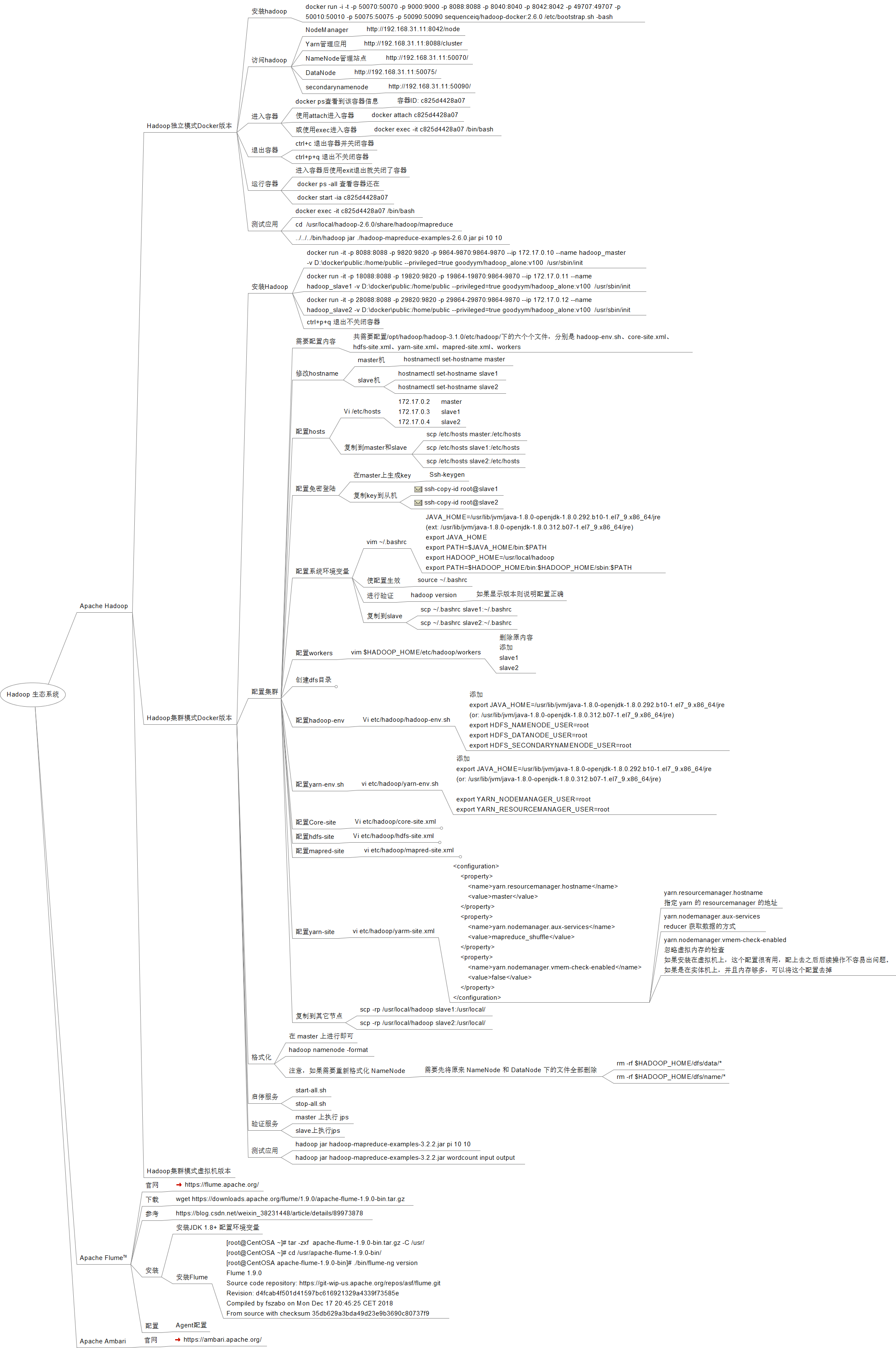

Apache Hadoop

Hadoop Cluster Mode (Docker Version)

Install Hadoop

docker run -it -p 8088:8088 -p 9820:9820 -p 9864-9870:9864-9870 --ip 172.17.0.10 --name hadoop_master -v D:\docker\public:/home/public --privileged=true goodyym/hadoop_alone:v100 /usr/sbin/init

docker run -it -p 18088:8088 -p 19820:9820 -p 19864-19870:9864-9870 --ip 172.17.0.11 --name hadoop_slave1 -v D:\docker\public:/home/public --privileged=true goodyym/hadoop_alone:v100 /usr/sbin/init
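The configuration files in the following steps refer to the host name `master`, while the containers above are started with fixed IP addresses. A minimal sketch of how the names could be registered, assuming `master` is 172.17.0.10 and a hypothetical name `slave1` is 172.17.0.11 (both inferred from the container names, not stated in the source). The helper `add_cluster_hosts` is illustrative only.

```shell
#!/bin/sh
# Sketch: register cluster host names in a hosts file.
# Assumption: "master" is 172.17.0.10 and "slave1" is 172.17.0.11.

add_cluster_hosts() {
    hosts_file="$1"
    while read -r ip name; do
        # Append the mapping only if the name is not already present
        if ! grep -qE "[[:space:]]${name}\$" "$hosts_file" 2>/dev/null; then
            printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
        fi
    done <<EOF
172.17.0.10 master
172.17.0.11 slave1
EOF
}
```

Inside each container this could be called as `add_cluster_hosts /etc/hosts`. Running it twice does not add duplicate entries.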

Configure the Cluster

Configure core-site
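The source gives no content for this step. As a rough sketch only, a core-site.xml for this setup might set `fs.defaultFS` to the NameNode address. The value `hdfs://master:9820` is an assumption based on port 9820 being published in the `docker run` commands above; the helper `write_core_site` is hypothetical and simply writes the file to the path it is given.

```shell
#!/bin/sh
# Sketch: write a minimal core-site.xml to the given path.
# Assumption: the NameNode RPC endpoint is master:9820.

write_core_site() {
    target="$1"
    cat > "$target" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <!-- Assumption: the NameNode listens on master:9820 -->
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://master:9820</value>
  </property>
</configuration>
EOF
}
```

From the Hadoop home directory it could be called as `write_core_site etc/hadoop/core-site.xml`; adjust the host and port if your NameNode is configured differently.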

Configure hdfs-site

vi etc/hadoop/hdfs-site.xml

<property>

<name>dfs.namenode.secondary.http-address</name>

<value>master:9868</value>

</property>

<property>

<name>dfs.datanode.address</name>

<value>master:9866</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/usr/local/hadoop/dfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/usr/local/hadoop/dfs/data</value>

</property>

<property>

<name>dfs.replication</name>

<value>3</value>

</property>

Configure mapred-site

vi etc/hadoop/mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapreduce.application.classpath</name>

<value>$HADOOP_MAPRED_HOME/share/hadoop/mapreduce/*:$HADOOP_MAPRED_HOME/share/hadoop/mapreduce/lib/*</value>

</property>

<property>

<name>yarn.app.mapreduce.am.env</name>

<value>HADOOP_MAPRED_HOME=/usr/local/hadoop</value>

</property>

<property>

<name>mapreduce.map.env</name>

<value>HADOOP_MAPRED_HOME=/usr/local/hadoop</value>

</property>

<property>

<name>mapreduce.reduce.env</name>

<value>HADOOP_MAPRED_HOME=/usr/local/hadoop</value>

</property>

</configuration>

Configure yarn-site

vi etc/hadoop/yarn-site.xml

<configuration>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>master</value>

</property>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.vmem-check-enabled</name>

<value>false</value>

</property>

</configuration>
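After editing the configuration files, it can help to confirm which values actually ended up on disk. The following is a small sketch of a hypothetical helper, `get_prop`, that is not part of Hadoop. It assumes each `<name>` and `<value>` element sits on a single line, as in the listings above, and prints the value of the first matching property.

```shell
#!/bin/sh
# Sketch: print the value of one property from a Hadoop-style XML file.
# Assumption: each <name> and <value> element fits on a single line.

get_prop() {
    file="$1"
    key="$2"
    awk -v key="$key" '
        /<name>/ {
            # Remember the most recent property name
            name = $0
            sub(/.*<name>/, "", name)
            sub(/<\/name>.*/, "", name)
        }
        /<value>/ && name == key {
            # Print the value that follows the requested name, then stop
            value = $0
            sub(/.*<value>/, "", value)
            sub(/<\/value>.*/, "", value)
            print value
            exit
        }
    ' "$file"
}
```

For example, `get_prop etc/hadoop/yarn-site.xml yarn.resourcemanager.hostname` should print `master` for the file shown above.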

Apache Flume™

Installation

Install Flume

[root@CentOSA ~]# tar -zxf apache-flume-1.9.0-bin.tar.gz -C /usr/

[root@CentOSA ~]# cd /usr/apache-flume-1.9.0-bin/

[root@CentOSA apache-flume-1.9.0-bin]# ./bin/flume-ng version

Flume 1.9.0

Source code repository: https://git-wip-us.apache.org/repos/asf/flume.git

Revision: d4fcab4f501d41597bc616921329a4339f73585e

Compiled by fszabo on Mon Dec 17 20:45:25 CET 2018

From source with checksum 35db629a3bda49d23e9b3690c80737f9
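The install steps above can be wrapped in a small function for repeat use. This is only a sketch: `install_flume` is a hypothetical helper, and it assumes the archive unpacks into a directory named after the tarball without its `.tar.gz` suffix, as is the case for `apache-flume-1.9.0-bin.tar.gz` in the session above.

```shell
#!/bin/sh
# Sketch: unpack a Flume tarball and print its version banner.
# Assumption: the archive's top-level directory equals the tarball
# name minus ".tar.gz" (e.g. apache-flume-1.9.0-bin).

install_flume() {
    tarball="$1"
    prefix="$2"
    name=$(basename "$tarball" .tar.gz)
    # Extract into the target prefix; stop if extraction fails
    tar -zxf "$tarball" -C "$prefix" || return 1
    # Run the bundled launcher to confirm the install works
    "$prefix/$name/bin/flume-ng" version
}
```

To mirror the commands above, it could be called as `install_flume apache-flume-1.9.0-bin.tar.gz /usr`.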
