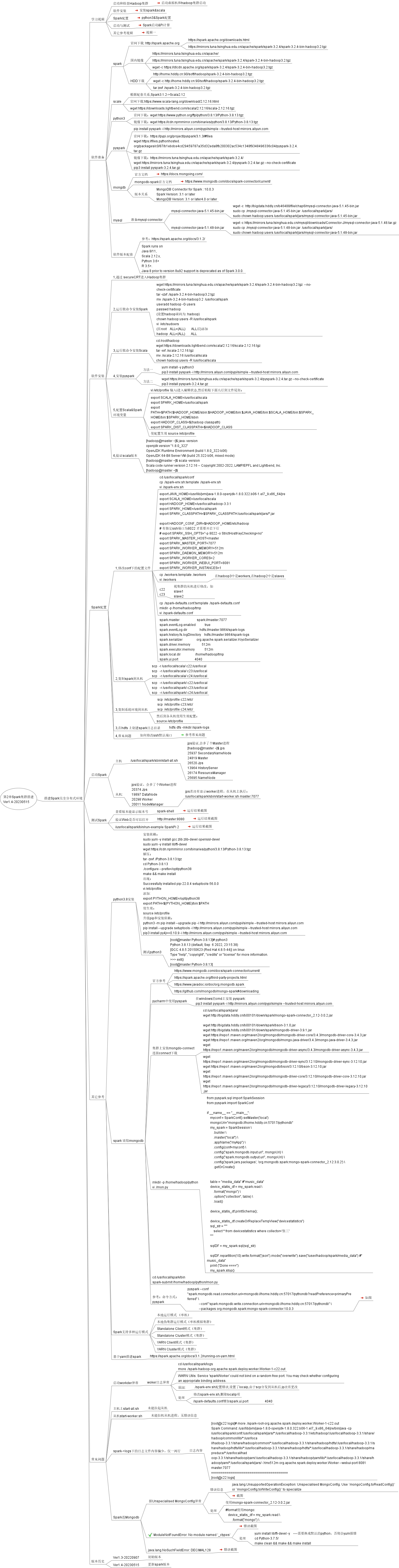

搭建Spark完全分布式环境

软件准备

pyspark

软件安装

2,运行批命令安装Spark

wget https://mirrors.tuna.tsinghua.edu.cn/apache/spark/spark-3.2.4/spark-3.2.4-bin-hadoop3.2.tgz --no-check-certificate

tar -xzvf ./spark-3.2.4-bin-hadoop3.2.tgz

mv ./spark-3.2.4-bin-hadoop3.2 /usr/local/spark

useradd hadoop -G users

passwd hadoop

(设置hadoop密码为: hadoop)

chown hadoop:users -R /usr/local/spark

vi /etc/sudoers

(在root ALL=(ALL) ALL后)添加

hadoop ALL=(ALL) ALL

tar -xzvf ./spark-3.2.4-bin-hadoop3.2.tgz

mv ./spark-3.2.4-bin-hadoop3.2 /usr/local/spark

useradd hadoop -G users

passwd hadoop

(设置hadoop密码为: hadoop)

chown hadoop:users -R /usr/local/spark

vi /etc/sudoers

(在root ALL=(ALL) ALL后)添加

hadoop ALL=(ALL) ALL

5,配置Scala&Spark

环境变量

环境变量

6,验证scala版本

[hadoop@master ~]$ java -version

openjdk version "1.8.0_322"

OpenJDK Runtime Environment (build 1.8.0_322-b06)

OpenJDK 64-Bit Server VM (build 25.322-b06, mixed mode)

[hadoop@master ~]$ scala -version

Scala code runner version 2.12.16 -- Copyright 2002-2022, LAMP/EPFL and Lightbend, Inc.

[hadoop@master ~]$

openjdk version "1.8.0_322"

OpenJDK Runtime Environment (build 1.8.0_322-b06)

OpenJDK 64-Bit Server VM (build 25.322-b06, mixed mode)

[hadoop@master ~]$ scala -version

Scala code runner version 2.12.16 -- Copyright 2002-2022, LAMP/EPFL and Lightbend, Inc.

[hadoop@master ~]$

Spark配置

1,修改conf下的配置文件

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.322.b06-1.el7_9.x86_64/jre

export SCALA_HOME=/usr/local/scala

export HADOOP_HOME=/usr/local/hadoop-3.3.1

export SPARK_HOME=/usr/local/spark

export SPARK_CLASSPATH=$SPARK_CLASSPATH:/usr/local/spark/jars/*.jar

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

# 有指定ssh端口为8022 才需要开启下行

# export SPARK_SSH_OPTS="-p 8022 -o StrictHostKeyChecking=no"

export SPARK_MASTER_HOST=master

export SPARK_MASTER_PORT=7077

export SPARK_WORKER_MEMORY=512m

export SPARK_DAEMON_MEMORY=512m

export SPARK_WORKER_CORES=2

export SPARK_WORKER_WEBUI_PORT=8081

export SPARK_WORKER_INSTANCES=1

export SCALA_HOME=/usr/local/scala

export HADOOP_HOME=/usr/local/hadoop-3.3.1

export SPARK_HOME=/usr/local/spark

export SPARK_CLASSPATH=$SPARK_CLASSPATH:/usr/local/spark/jars/*.jar

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

# 有指定ssh端口为8022 才需要开启下行

# export SPARK_SSH_OPTS="-p 8022 -o StrictHostKeyChecking=no"

export SPARK_MASTER_HOST=master

export SPARK_MASTER_PORT=7077

export SPARK_WORKER_MEMORY=512m

export SPARK_DAEMON_MEMORY=512m

export SPARK_WORKER_CORES=2

export SPARK_WORKER_WEBUI_PORT=8081

export SPARK_WORKER_INSTANCES=1

cp ./spark-defaults.conf.template ./spark-defaults.conf

mkdir -p /home/hadoop/tmp

vi ./spark-defaults.conf

mkdir -p /home/hadoop/tmp

vi ./spark-defaults.conf

spark.master spark://master:7077

spark.eventLog.enabled true

spark.eventLog.dir hdfs://master:9864/spark-logs

spark.history.fs.logDirectory hdfs://master:9864/spark-logs

spark.serializer org.apache.spark.serializer.KryoSerializer

spark.driver.memory 512m

spark.executor.memory 512m

spark.local.dir /home/hadoop/tmp

spark.ui.port 4040

spark.eventLog.enabled true

spark.eventLog.dir hdfs://master:9864/spark-logs

spark.history.fs.logDirectory hdfs://master:9864/spark-logs

spark.serializer org.apache.spark.serializer.KryoSerializer

spark.driver.memory 512m

spark.executor.memory 512m

spark.local.dir /home/hadoop/tmp

spark.ui.port 4040

其它参考

python3.8安装

安装依赖:

sudo yum -y install gcc zlib zlib-devel openssl-devel

sudo yum -y install libffi-devel

wget https://cdn.npmmirror.com/binaries/python/3.8.13/Python-3.8.13.tgz

解压:

tar -zxvf ./Python-3.8.13.tgz

cd Python-3.8.13

./configure --prefix=/opt/python38

make && make install

出现:

Successfully installed pip-22.0.4 setuptools-56.0.0

vi /etc/profile

添加:

export PYTHON_HOME=/opt/python38

export PATH=${PYTHON_HOME}/bin:$PATH

使生效:

source /etc/profile

升级pip和安装依赖:

python3 -m pip install --upgrade pip -i http://mirrors.aliyun.com/pypi/simple --trusted-host mirrors.aliyun.com

pip install --upgrade setuptools -i http://mirrors.aliyun.com/pypi/simple --trusted-host mirrors.aliyun.com

pip3 install py4j==0.10.9 -i http://mirrors.aliyun.com/pypi/simple --trusted-host mirrors.aliyun.com

sudo yum -y install gcc zlib zlib-devel openssl-devel

sudo yum -y install libffi-devel

wget https://cdn.npmmirror.com/binaries/python/3.8.13/Python-3.8.13.tgz

解压:

tar -zxvf ./Python-3.8.13.tgz

cd Python-3.8.13

./configure --prefix=/opt/python38

make && make install

出现:

Successfully installed pip-22.0.4 setuptools-56.0.0

vi /etc/profile

添加:

export PYTHON_HOME=/opt/python38

export PATH=${PYTHON_HOME}/bin:$PATH

使生效:

source /etc/profile

升级pip和安装依赖:

python3 -m pip install --upgrade pip -i http://mirrors.aliyun.com/pypi/simple --trusted-host mirrors.aliyun.com

pip install --upgrade setuptools -i http://mirrors.aliyun.com/pypi/simple --trusted-host mirrors.aliyun.com

pip3 install py4j==0.10.9 -i http://mirrors.aliyun.com/pypi/simple --trusted-host mirrors.aliyun.com

spark 读取mongodb

集群上安装mongdo-connect

连接connect下载

连接connect下载

cd /usr/local/spark/jars/

wget http://bigdata.hddly.cn/b00101/down/spark/mongo-spark-connector_2.12-3.0.2.jar

wget http://bigdata.hddly.cn/b00101/down/spark/bson-3.1.0.jar

wget http://bigdata.hddly.cn/b00101/down/spark/mongodb-driver-3.9.1.jar

wget https://repo1.maven.org/maven2/org/mongodb/mongodb-driver-core/3.4.3/mongodb-driver-core-3.4.3.jar

wget https://repo1.maven.org/maven2/org/mongodb/mongo-java-driver/3.4.3/mongo-java-driver-3.4.3.jar

wget https://repo1.maven.org/maven2/org/mongodb/mongodb-driver-async/3.4.3/mongodb-driver-async-3.4.3.jar

wget http://bigdata.hddly.cn/b00101/down/spark/mongo-spark-connector_2.12-3.0.2.jar

wget http://bigdata.hddly.cn/b00101/down/spark/bson-3.1.0.jar

wget http://bigdata.hddly.cn/b00101/down/spark/mongodb-driver-3.9.1.jar

wget https://repo1.maven.org/maven2/org/mongodb/mongodb-driver-core/3.4.3/mongodb-driver-core-3.4.3.jar

wget https://repo1.maven.org/maven2/org/mongodb/mongo-java-driver/3.4.3/mongo-java-driver-3.4.3.jar

wget https://repo1.maven.org/maven2/org/mongodb/mongodb-driver-async/3.4.3/mongodb-driver-async-3.4.3.jar

wget https://repo1.maven.org/maven2/org/mongodb/mongodb-driver-sync/3.12.10/mongodb-driver-sync-3.12.10.jar

wget https://repo1.maven.org/maven2/org/mongodb/bson/3.12.10/bson-3.12.10.jar

wget https://repo1.maven.org/maven2/org/mongodb/mongodb-driver-core/3.12.10/mongodb-driver-core-3.12.10.jar

wget https://repo1.maven.org/maven2/org/mongodb/mongodb-driver-legacy/3.12.10/mongodb-driver-legacy-3.12.10.jar

wget https://repo1.maven.org/maven2/org/mongodb/bson/3.12.10/bson-3.12.10.jar

wget https://repo1.maven.org/maven2/org/mongodb/mongodb-driver-core/3.12.10/mongodb-driver-core-3.12.10.jar

wget https://repo1.maven.org/maven2/org/mongodb/mongodb-driver-legacy/3.12.10/mongodb-driver-legacy-3.12.10.jar

mkdir -p /home/hadoop/python

vi ./mon.py

vi ./mon.py

from pyspark.sql import SparkSession

from pyspark import SparkConf

if __name__ == "__main__":

myconf = SparkConf().setMaster('local')

mongoUri="mongodb://home.hddly.cn:57017/pythondb"

my_spark = SparkSession \

.builder \

.master("local") \

.appName("myApp") \

.config(conf=myconf) \

.config("spark.mongodb.input.uri", mongoUri) \

.config("spark.mongodb.output.uri", mongoUri) \

.config('spark.jars.packages', 'org.mongodb.spark:mongo-spark-connector_2.12:3.0.2') \

.getOrCreate()

table = "media_data" #"music_data"

device_statis_df = my_spark.read \

.format("mongo") \

.option("collection", table) \

.load()

device_statis_df.printSchema();

device_statis_df.createOrReplaceTempView("devicestatistics")

sql_str = """

select * from devicestatistics where collector='张三'

"""

sqlDF = my_spark.sql(sql_str)

sqlDF.repartition(10).write.format("json").mode("overwrite").save("/user/hadoop/spark/media_data") #"music_data"

print ("Done ====")

my_spark.stop()

from pyspark import SparkConf

if __name__ == "__main__":

myconf = SparkConf().setMaster('local')

mongoUri="mongodb://home.hddly.cn:57017/pythondb"

my_spark = SparkSession \

.builder \

.master("local") \

.appName("myApp") \

.config(conf=myconf) \

.config("spark.mongodb.input.uri", mongoUri) \

.config("spark.mongodb.output.uri", mongoUri) \

.config('spark.jars.packages', 'org.mongodb.spark:mongo-spark-connector_2.12:3.0.2') \

.getOrCreate()

table = "media_data" #"music_data"

device_statis_df = my_spark.read \

.format("mongo") \

.option("collection", table) \

.load()

device_statis_df.printSchema();

device_statis_df.createOrReplaceTempView("devicestatistics")

sql_str = """

select * from devicestatistics where collector='张三'

"""

sqlDF = my_spark.sql(sql_str)

sqlDF.repartition(10).write.format("json").mode("overwrite").save("/user/hadoop/spark/media_data") #"music_data"

print ("Done ====")

my_spark.stop()

常见问题

启动workder异常

spark->logs下的日志文件内容偏少,仅一两行

日志内容

[root@c22 logs]# more ./spark-root-org.apache.spark.deploy.worker.Worker-1-c22.out

Spark Command: /usr/lib/jvm/java-1.8.0-openjdk-1.8.0.322.b06-1.el7_9.x86_64/jre/bin/java -cp /usr/local/spark/conf/:/usr/local/spark/jars/*:/usr/local/hadoop-3.3.1/etc/hadoop/:/usr/local/hadoop-3.3.1/share/hadoop/common/lib/*:/usr/loca

l/hadoop-3.3.1/share/hadoop/common/*:/usr/local/hadoop-3.3.1/share/hadoop/hdfs/:/usr/local/hadoop-3.3.1/share/hadoop/hdfs/lib/*:/usr/local/hadoop-3.3.1/share/hadoop/hdfs/*:/usr/local/hadoop-3.3.1/share/hadoop/mapreduce/*:/usr/local/had

oop-3.3.1/share/hadoop/yarn/:/usr/local/hadoop-3.3.1/share/hadoop/yarn/lib/*:/usr/local/hadoop-3.3.1/share/hadoop/yarn/*:/usr/local/spark/jars/ -Xmx512m org.apache.spark.deploy.worker.Worker --webui-port 8081 master:7077

========================================

[root@c22 logs]

Spark Command: /usr/lib/jvm/java-1.8.0-openjdk-1.8.0.322.b06-1.el7_9.x86_64/jre/bin/java -cp /usr/local/spark/conf/:/usr/local/spark/jars/*:/usr/local/hadoop-3.3.1/etc/hadoop/:/usr/local/hadoop-3.3.1/share/hadoop/common/lib/*:/usr/loca

l/hadoop-3.3.1/share/hadoop/common/*:/usr/local/hadoop-3.3.1/share/hadoop/hdfs/:/usr/local/hadoop-3.3.1/share/hadoop/hdfs/lib/*:/usr/local/hadoop-3.3.1/share/hadoop/hdfs/*:/usr/local/hadoop-3.3.1/share/hadoop/mapreduce/*:/usr/local/had

oop-3.3.1/share/hadoop/yarn/:/usr/local/hadoop-3.3.1/share/hadoop/yarn/lib/*:/usr/local/hadoop-3.3.1/share/hadoop/yarn/*:/usr/local/spark/jars/ -Xmx512m org.apache.spark.deploy.worker.Worker --webui-port 8081 master:7077

========================================

[root@c22 logs]